ETL Pipeline

Definition, use cases, and comparison to data pipelines. This guide defines ETL pipelines, gives examples of how they are used, and explains how they differ from data pipelines.

What is an ETL Pipeline?

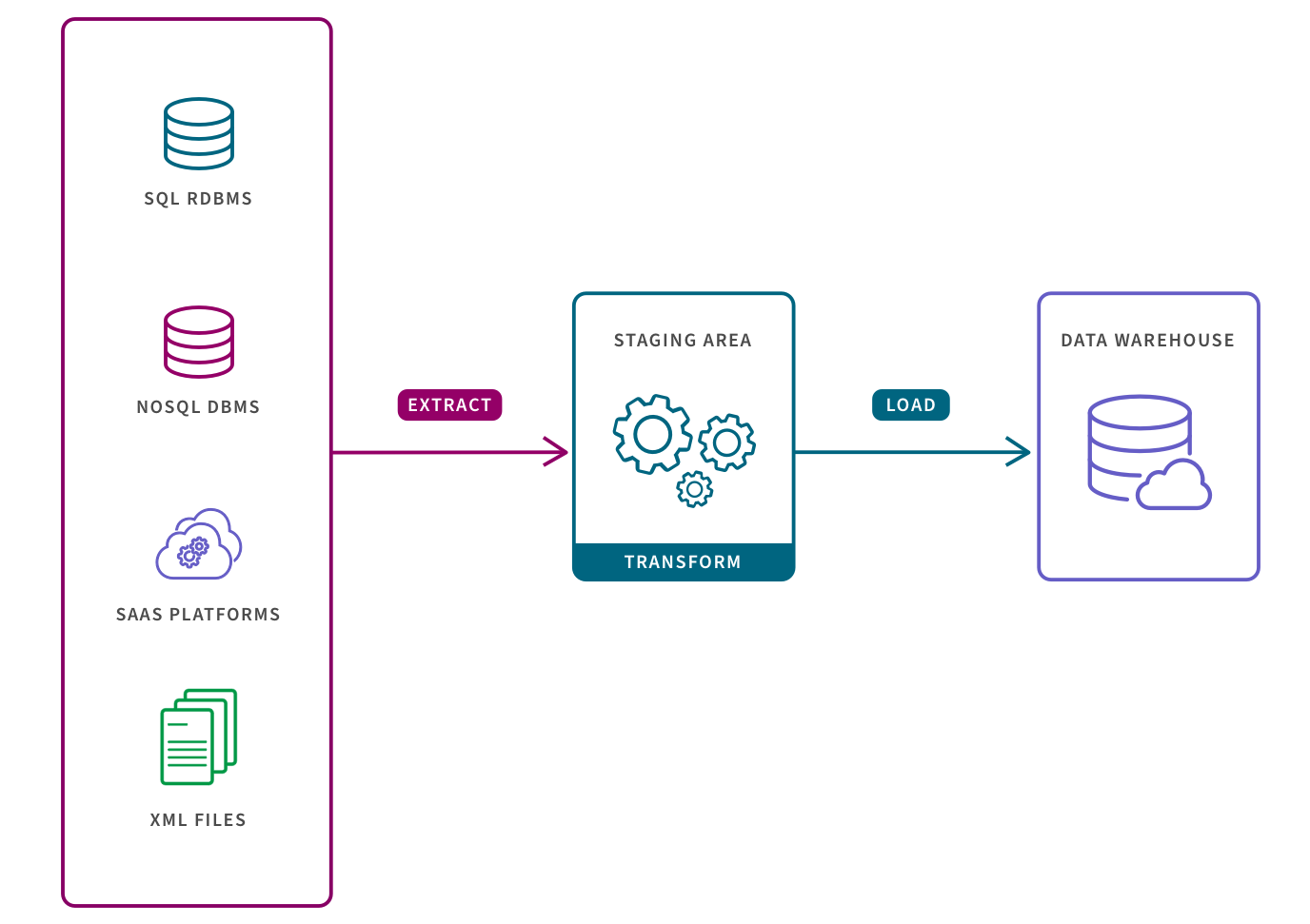

An ETL pipeline is a set of processes to extract data from one system, transform it, and load it into a target repository. ETL is an acronym for “Extract, Transform, and Load” and describes the three stages of the process.

What is ETL?

ETL names the three stages that data passes through on its way from a source system to a target repository:

- Extract: the process of pulling data from a source such as an SQL or NoSQL database, an XML file or a cloud platform holding data for systems such as marketing tools, CRM systems, or transactional systems.

- Transform: the process of converting the format or structure of the data set to match the target system.

- Load: the process of placing the data set into the target system. This can be a database, an application such as a CRM platform, a data warehouse (including cloud data warehouses such as Snowflake, Amazon Redshift, and Google BigQuery), a data lake, or a data lakehouse.
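As a rough illustration, the three stages can be sketched as three small Python functions. This is a minimal sketch, not a production pipeline. It assumes the source is a CSV export (the hypothetical `RAW_CSV` string) and uses an in-memory SQLite database from Python's standard library as a stand-in for the target repository. The function names and the `customers` table schema are illustrative only.

```python
import csv
import io
import sqlite3

# Hypothetical CSV export from a source system (assumption for illustration).
RAW_CSV = """id,name,signup_date,amount
1, Alice ,2024-01-05,19.99
2,bob,2024-01-06,5
"""

def extract(raw_csv: str) -> list[dict]:
    """Extract: pull rows from the source (here, a CSV string)."""
    return list(csv.DictReader(io.StringIO(raw_csv)))

def transform(rows: list[dict]) -> list[tuple]:
    """Transform: convert types and normalize values to match the target schema."""
    return [
        (
            int(row["id"]),
            row["name"].strip().title(),   # " Alice " -> "Alice", "bob" -> "Bob"
            row["signup_date"],
            round(float(row["amount"]), 2),
        )
        for row in rows
    ]

def load(records: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: write the already-transformed records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(id INTEGER PRIMARY KEY, name TEXT, signup_date TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?, ?)", records)
    conn.commit()

def run_etl(raw_csv: str, conn: sqlite3.Connection) -> None:
    """Run the stages in ETL order: transform happens before load."""
    load(transform(extract(raw_csv)), conn)

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    run_etl(RAW_CSV, conn)
    print(conn.execute("SELECT * FROM customers ORDER BY id").fetchall())
```

Running the script prints `[(1, 'Alice', '2024-01-05', 19.99), (2, 'Bob', '2024-01-06', 5.0)]`. The data is fully cleaned and typed before it is written, which is the defining trait of ETL.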

The ETL process is generally best suited to smaller data sets that require complex transformations. For larger or unstructured data sets, and when timeliness is important, the ELT (Extract, Load, Transform) process is often more appropriate.

ETL Pipeline Use Cases

By converting raw data to match the target system, ETL pipelines enable systematic and accurate data analysis in the target repository. From data migration to faster insights, they are critical for data-driven organizations. They save data teams time and effort by reducing errors, bottlenecks, and latency, so that data flows smoothly from one system to another. Here are some of the primary use cases:

- Enabling data migration from a legacy system to a new repository.

- Centralizing all data sources to obtain a consolidated version of the data.

- Enriching data in one system, such as a CRM platform, with data from another system, such as a marketing automation platform.

- Providing a stable dataset that analytics tools can access quickly for a single, predefined use case, because the data has already been structured and transformed.

- Helping organizations comply with regulations such as GDPR, HIPAA, and CCPA, because sensitive data can be omitted before it is loaded into the target system.
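The compliance use case comes down to a transform step that runs before the load. A minimal sketch, assuming records arrive as Python dictionaries and that the hypothetical `SENSITIVE_FIELDS` set lists the columns to omit. Which fields actually count as sensitive depends on the regulation and the data, and this example does not decide that.

```python
# Hypothetical set of sensitive column names. Which fields must be omitted
# depends on the regulation and the data; this set is only an assumption.
SENSITIVE_FIELDS = frozenset({"email", "ssn", "phone"})

def drop_sensitive(rows: list[dict], sensitive: frozenset = SENSITIVE_FIELDS) -> list[dict]:
    """Transform step: return copies of the rows without any sensitive keys.

    Because this runs before the load, the omitted values never reach the target.
    """
    return [{k: v for k, v in row.items() if k not in sensitive} for row in rows]

if __name__ == "__main__":
    source_rows = [
        {"id": 1, "name": "Alice", "email": "alice@example.com", "country": "DE"},
        {"id": 2, "name": "Bob", "ssn": "000-00-0000", "country": "US"},
    ]
    print(drop_sensitive(source_rows))
    # [{'id': 1, 'name': 'Alice', 'country': 'DE'}, {'id': 2, 'name': 'Bob', 'country': 'US'}]
```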

Used in these ways, ETL pipelines break down data silos and create a single source of truth that gives a complete picture of the business. Users can then apply BI tools and build data visualizations and dashboards to derive and share actionable insights.

What is a Data Pipeline?

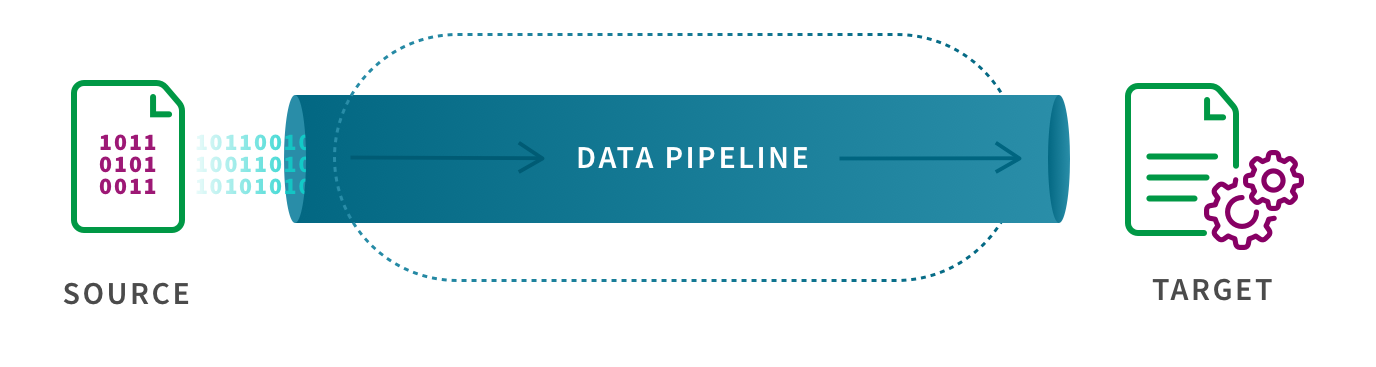

The terms “ETL pipeline” and “data pipeline” are sometimes used synonymously, but they shouldn’t be. “Data pipeline” is an umbrella term for any process that moves data between systems, and an ETL pipeline is one particular type of data pipeline.

A data pipeline is a process for moving data from a source system to a target repository. More specifically, data pipelines use software to automate the steps involved in moving data for a specific use case. These may include extracting data from a source system; transforming, combining, and validating it; and loading it into a target repository. Not every pipeline includes every step.
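One way to picture a pipeline whose steps may or may not be present is a runner that takes a source, an ordered list of optional steps, and a target. The sketch below assumes in-memory lists of dictionaries; `run_pipeline`, `combine_with`, and `validate` are hypothetical names, and the validation rule (every record needs an `id`) is only an example.

```python
from typing import Callable, Iterable

Record = dict
Step = Callable[[list[Record]], list[Record]]

def run_pipeline(
    source: Callable[[], list[Record]],
    steps: Iterable[Step],
    sink: Callable[[list[Record]], None],
) -> None:
    """Pull from the source, apply each step in order, then hand the result to the sink."""
    data = source()
    for step in steps:  # any subset of steps, including none, is allowed
        data = step(data)
    sink(data)

def combine_with(extra: list[Record]) -> Step:
    """Hypothetical 'combine' step: append records from a second source."""
    def combine(rows: list[Record]) -> list[Record]:
        return rows + extra
    return combine

def validate(rows: list[Record]) -> list[Record]:
    """Hypothetical 'validate' step: drop records that have no id."""
    return [r for r in rows if r.get("id") is not None]

if __name__ == "__main__":
    target: list[Record] = []
    run_pipeline(
        source=lambda: [{"id": 1}, {"id": None}],
        steps=[combine_with([{"id": 2}]), validate],
        sink=target.extend,
    )
    print(target)  # [{'id': 1}, {'id': 2}]
```

Passing an empty step list moves the data unchanged: that is still a data pipeline, but not an ETL pipeline.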

In certain types of data pipelines, for example, the “transform” step is decoupled from the extract and load steps, as in ELT, where data is transformed only after it has been loaded into the target system.
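To make that decoupling concrete, here is a minimal ELT sketch. It again uses an in-memory SQLite database as a stand-in for a cloud data warehouse, which is an assumption for illustration. The raw data is loaded untouched into a hypothetical `raw_orders` staging table, and the transformation runs later as SQL inside the target system. Table and function names are illustrative.

```python
import sqlite3

def load_raw(conn: sqlite3.Connection, rows: list[tuple]) -> None:
    """Extract + Load: land the source rows as-is, with no type conversion."""
    conn.execute("CREATE TABLE raw_orders (id TEXT, amount TEXT, status TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", rows)
    conn.commit()

def transform_in_target(conn: sqlite3.Connection) -> None:
    """Transform: runs later, inside the target, using the target's own SQL engine."""
    conn.execute(
        """
        CREATE TABLE orders AS
        SELECT CAST(id AS INTEGER)  AS id,
               CAST(amount AS REAL) AS amount,
               LOWER(TRIM(status))  AS status
        FROM raw_orders
        WHERE status IS NOT NULL
        """
    )
    conn.commit()

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load_raw(conn, [("1", "10.5", " PAID "), ("2", "3", None)])
    # The transform can run at any later point, e.g. on its own schedule.
    transform_in_target(conn)
    print(conn.execute("SELECT * FROM orders").fetchall())  # [(1, 10.5, 'paid')]
```

Because the raw rows remain in `raw_orders`, the transformation can be rerun or changed later without extracting the data again.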

As with an ETL pipeline, the target system for a data pipeline can be a database, an application, a data warehouse (including cloud data warehouses), a data lake, or a data lakehouse. The target system can combine data from a variety of sources and structure it for fast, reliable analysis.

Data Pipeline Use Cases

Data pipelines also save data teams time and effort and keep data flowing smoothly from one system to another. However, the broad category of data pipelines includes processes that support use cases ETL pipelines cannot. For example, some data pipelines support data streaming, which enables use cases such as:

- Enabling real-time reporting

- Enabling real-time data analysis

- Triggering other systems to run other business processes
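A streaming pipeline handles each event as it arrives instead of waiting for a complete data set. The sketch below is a simplified illustration under stated assumptions: a plain Python iterable stands in for a real event stream such as a message queue, the yielded running total stands in for a real-time report, and the `on_alert` callback and `threshold` rule are hypothetical placeholders for triggering a process in another system.

```python
from typing import Callable, Iterable, Iterator

def stream_pipeline(
    events: Iterable[dict],
    on_alert: Callable[[dict], None],
    threshold: float = 1000.0,
) -> Iterator[float]:
    """Process events one at a time and yield an updated running total after each.

    `events` stands in for a real event stream; `on_alert` and `threshold`
    are hypothetical placeholders for triggering another system.
    """
    total = 0.0
    for event in events:
        total += event["amount"]          # metric updated as each event arrives
        if event["amount"] >= threshold:  # hypothetical business rule
            on_alert(event)               # e.g. notify a downstream system
        yield total                       # e.g. push to a live dashboard

if __name__ == "__main__":
    alerts: list[dict] = []
    incoming = [{"id": 1, "amount": 50.0}, {"id": 2, "amount": 1500.0}, {"id": 3, "amount": 25.0}]
    for running_total in stream_pipeline(incoming, alerts.append):
        print(running_total)  # 50.0, then 1550.0, then 1575.0
    print(alerts)             # [{'id': 2, 'amount': 1500.0}]
```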

Data Pipeline vs ETL

Because “data pipeline” is the umbrella term for every process that moves data, and an ETL pipeline is one particular type of data pipeline, the two terms are not interchangeable. Here are three key differences when comparing data pipelines and ETL pipelines.

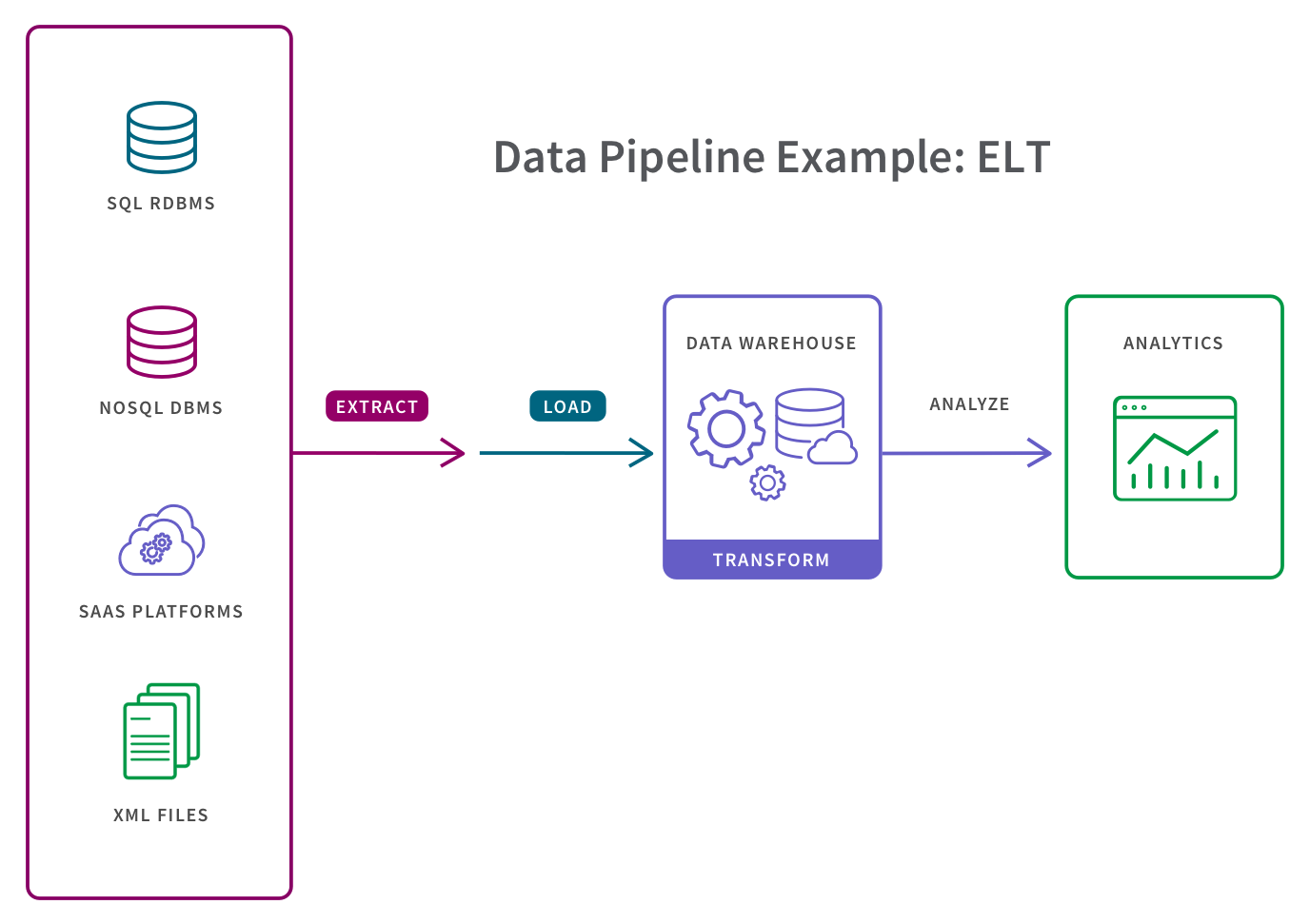

- Data pipelines don’t necessarily transform the data. As shown in the two illustrations above, data pipelines can either transform data after load (ELT) or not transform it at all, whereas ETL pipelines transform data before loading it into the target system.

- Data pipelines don’t necessarily finish after loading data. Given that many modern data pipelines stream data, their load process can enable real-time reporting or can initiate processes in other systems. On the other hand, ETL pipelines end after loading data into the target repository.

- Data pipelines don’t necessarily run in batches. Modern data pipelines often process data in real time using streaming computation. This keeps the data continuously updated, which supports real-time analytics and reporting and lets the pipeline trigger other systems. ETL pipelines, by contrast, usually move data to the target system in batches on a regular schedule.
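The batch-versus-streaming distinction can be sketched with two hypothetical runners that share the same transform and load functions. This is a simplified illustration, assuming records are dictionaries and that `load` receives a list of records. The batch runner makes one load call for the whole bounded set and then finishes; the streaming runner loads each record as soon as it arrives and, given an unbounded source, would keep running.

```python
from typing import Callable, Iterable

Record = dict
Loader = Callable[[list[Record]], None]

def double_amount(record: Record) -> Record:
    """Hypothetical transform shared by both runners."""
    return {**record, "amount": record["amount"] * 2}

def run_batch(records: Iterable[Record], transform: Callable[[Record], Record], load: Loader) -> None:
    """Batch: transform a bounded set of records, load it in one call, then finish."""
    load([transform(r) for r in records])

def run_streaming(source: Iterable[Record], transform: Callable[[Record], Record], load: Loader) -> None:
    """Streaming: transform and load each record as soon as it arrives.

    With an unbounded source (for example, a message queue), this loop would not end.
    """
    for record in source:
        load([transform(record)])

if __name__ == "__main__":
    data = [{"amount": 1}, {"amount": 2}, {"amount": 3}]
    batch_calls: list[list[Record]] = []
    stream_calls: list[list[Record]] = []
    run_batch(data, double_amount, batch_calls.append)
    run_streaming(iter(data), double_amount, stream_calls.append)
    print(len(batch_calls), len(stream_calls))  # 1 3
```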
