Making transactional data available for analytics at the speed of change


Challenge

Data lake projects often fail to produce a return on investment because they are complex to implement, need specialized domain expertise and take months or even years to roll out.

As a result, data engineers waste time generating ad-hoc data sets, and data scientists not only lack confidence in the provenance of the data but also struggle to derive insights from outdated information.


Solution

Qlik Compose™ for Data Lakes (formerly Attunity Compose for Data Lakes) automates the creation and deployment of pipelines that help data engineers successfully deliver a return on their existing data lake investments.

With the no-code approach from Qlik (Attunity), data professionals can build data lakes in days, not months, shortening the time to insight for accurate and governed transactional data.

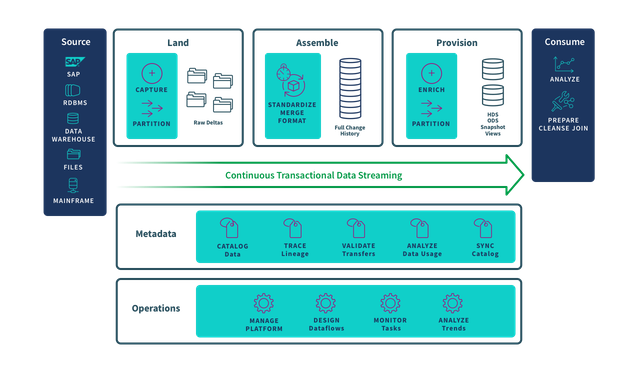

Multi-zone data lake

Failed data lake projects often use a single zone for data ingest, query and analysis. Qlik Compose for Data Lakes mitigates this problem by promoting a multi-zone, best-practice approach.

- Landing Zone – Raw data is continuously ingested into a data lake from a variety of data sources.

- Assemble Zone – Data is standardized, repartitioned and merged into a transformation-ready store.

- Provision Zone – Data engineers create enriched data subsets for consumption by data analysts or scientists.
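
The three zones can be pictured with a minimal Python sketch. It is an illustration only, not Qlik Compose code: the record layout, the `assemble` and `provision` functions, and the `min_amount` filter are all hypothetical names chosen for this example.

```python
# Hypothetical raw change records as they might sit in a landing zone:
# append-only, in arrival order, with unnormalized values.
landing_zone = [
    {"op": "insert", "id": 1, "customer": " alice ", "amount": "10.50"},
    {"op": "insert", "id": 2, "customer": "Bob", "amount": "7.00"},
    {"op": "update", "id": 1, "customer": "Alice", "amount": "12.00"},
    {"op": "delete", "id": 2},
]


def assemble(records):
    """Standardize raw records and merge them into one current row per key."""
    store = {}
    for rec in records:
        if rec["op"] == "delete":
            store.pop(rec["id"], None)
            continue
        # Inserts and updates both overwrite the current row for the key.
        store[rec["id"]] = {
            "id": rec["id"],
            "customer": rec["customer"].strip().title(),
            "amount": float(rec["amount"]),
        }
    return store


def provision(store, min_amount):
    """Create a filtered subset of the assembled data for analysts."""
    rows = sorted(store.values(), key=lambda row: row["id"])
    return [row for row in rows if row["amount"] >= min_amount]


assemble_zone = assemble(landing_zone)
provision_zone = provision(assemble_zone, min_amount=10.0)
print(provision_zone)
```

Keeping the raw records untouched in the landing zone means the assemble and provision steps can be rerun at any time without going back to the source system.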

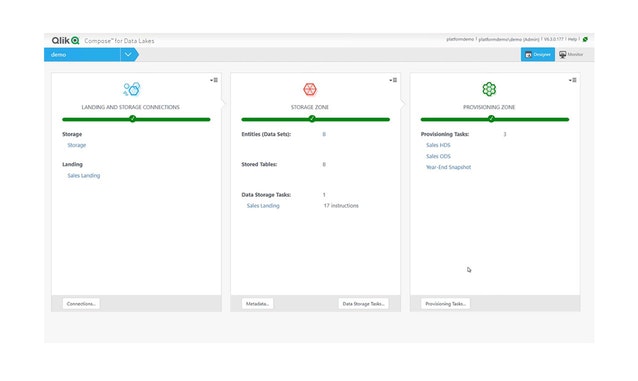

Automated data pipeline

Realize faster value by automating data ingestion, target schema creation, and continuous data updates to zones.

- Data Pipeline Designer – The point-and-click designer automatically generates transformation logic and pushes it to task engines for execution.

- Hive or Spark Task Engines – Run transformation tasks as a single, end-to-end process on either Hive or Spark engines.

- Historical Data Store – Standardizes and combines multiple change streams into a single historical data store ready for downstream processing.

- Data Set Provisioning – Easily create analytics-ready data subsets for analysts or further downstream processing.

- Multiple Export Formats – Data sets can be exported in several formats including ORC, Avro and Parquet.
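
As a rough illustration of what combining change streams into a historical store can involve, the Python sketch below merges two ordered streams and records a validity range for each version of a row. It assumes each stream is already sorted by commit timestamp. The field names (`ts`, `key`, `status`, `valid_from`, `valid_to`) and the `build_history` function are hypothetical and do not describe the product's internal format.

```python
import heapq

# Two hypothetical change streams, each already ordered by commit timestamp.
stream_a = [
    {"ts": 1, "key": "order-1", "status": "created"},
    {"ts": 4, "key": "order-1", "status": "shipped"},
]
stream_b = [
    {"ts": 2, "key": "order-2", "status": "created"},
    {"ts": 3, "key": "order-2", "status": "cancelled"},
]


def build_history(*streams):
    """Merge ordered change streams into versioned rows with validity ranges."""
    history = []
    open_version = {}  # key -> index in history of the currently valid row
    for change in heapq.merge(*streams, key=lambda c: c["ts"]):
        key = change["key"]
        if key in open_version:
            # Close the previous version of this key at the new change's time.
            history[open_version[key]]["valid_to"] = change["ts"]
        history.append({
            "key": key,
            "status": change["status"],
            "valid_from": change["ts"],
            "valid_to": None,  # None marks the current version
        })
        open_version[key] = len(history) - 1
    return history


history = build_history(stream_a, stream_b)
current = [row for row in history if row["valid_to"] is None]
```

Because every version keeps its validity range, downstream jobs can query either the current state (`valid_to` is `None`) or the state as of any earlier point in time.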

Continuous streaming data

Change data capture (CDC) technology delivers only committed changes made to your enterprise data sources to your data lake without imposing additional overhead on the source system or data lake infrastructure.

- Universal Connectivity - Supports all major data sources including relational databases, mainframes, SAP, data streaming solutions, enterprise data warehouses, big data technologies, and cloud infrastructures such as Amazon Web Services, Microsoft Azure and Google Cloud Platform.

- No Coding, Simple GUI - Use an intuitive interface to quickly and easily configure data feeds.

- High Performance and Scalable - Ingest data at high speeds with near-linear scalability from hundreds to thousands of data sources.

- Agentless Architecture - Log-based, agentless CDC reduces the burden of administration and eliminates the source system processing penalty.

- Real-time Data Updates - Continuously ingest data with enterprise-class change data capture (CDC) that delivers updates with virtually no latency.
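
The idea of delivering only committed changes from a transaction log can be sketched in a few lines of Python. This is a simplified model, not the product's CDC engine: the log entry layout and the `committed_changes` function are assumptions made for illustration.

```python
# Hypothetical transaction log entries, in the order they were written.
log = [
    {"txn": 1, "type": "change", "row": {"id": 1, "qty": 5}},
    {"txn": 2, "type": "change", "row": {"id": 2, "qty": 9}},
    {"txn": 1, "type": "commit"},
    {"txn": 2, "type": "rollback"},
    {"txn": 3, "type": "change", "row": {"id": 3, "qty": 1}},
]


def committed_changes(entries):
    """Yield row changes only once their transaction has committed."""
    pending = {}  # txn id -> changes buffered until commit or rollback
    for entry in entries:
        txn = entry["txn"]
        if entry["type"] == "change":
            pending.setdefault(txn, []).append(entry["row"])
        elif entry["type"] == "commit":
            yield from pending.pop(txn, [])
        elif entry["type"] == "rollback":
            pending.pop(txn, None)  # discard changes that never committed


delivered = list(committed_changes(log))
print(delivered)
```

Here transaction 2 is rolled back and transaction 3 is still open when the log ends, so only the change from transaction 1 is delivered.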

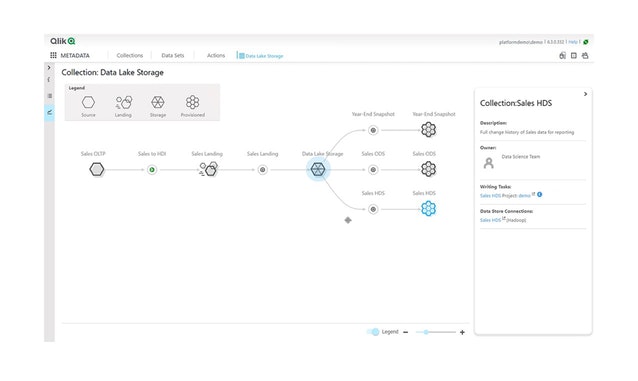

Centralized metadata integration

Understand, utilize and trust data flows with the help of a centralized metadata repository.

- Data Catalog – Automatically collect metadata from source and target systems.

- Data Profiling – Generate summaries and detailed reports of the data attributes in the data lake and pipeline.

- Data Lineage – Highlight data provenance and the downstream impact of data changes.

- Metadata Directory Interoperability – Synchronize metadata with leading metadata repositories such as Apache Atlas.
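
To make the notion of downstream impact concrete, the following Python sketch walks a small lineage graph breadth-first. The data set names and the `downstream_impact` function are hypothetical; a real metadata repository would expose lineage through its own interfaces.

```python
from collections import deque

# Hypothetical lineage edges: each data set maps to the data sets derived from it.
lineage = {
    "crm.customers": ["landing.customers"],
    "landing.customers": ["assemble.customers"],
    "assemble.customers": ["provision.churn_features", "provision.sales_report"],
    "provision.churn_features": [],
    "provision.sales_report": [],
}


def downstream_impact(graph, source):
    """Return every data set reachable from source, in breadth-first order."""
    seen = set()
    order = []
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for child in graph.get(node, []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


impacted = downstream_impact(lineage, "landing.customers")
print(impacted)
```

A change to `landing.customers` in this example would affect the assembled table and both provisioned data sets, which is the kind of answer a lineage view is meant to give before a schema change is rolled out.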

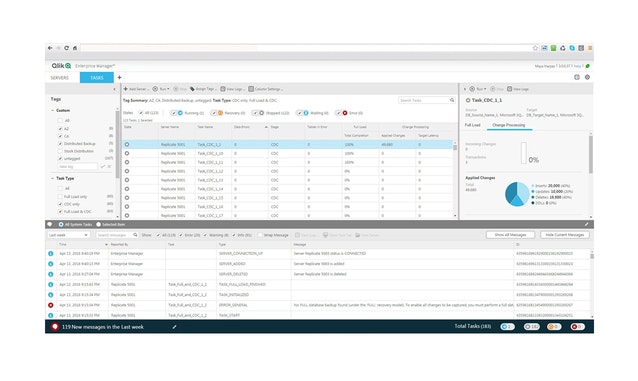

Enterprise-grade administration and management

The central command center helps you configure, execute and monitor data pipelines across the enterprise.

- Configurable Dashboard Views - Group tasks by server, data source or target, application or physical location.

- KPI Alerting - Monitor hundreds of data flow tasks in real time through KPIs and alerts.

- Search and Filter - Gain insights by searching and filtering tasks by data replication status and system operation.

- Granular Access Control - Leverage role-based access control for policy-based management of user views and actions.

- Programmable Integration - Integrate with enterprise dashboards via REST and .NET interfaces.

Learn more about data ingestion for Hadoop data lakes

Accelerate data ingestion at scale from many sources into your data lake. Qlik’s easy and scalable data ingestion platform supports many source database systems, delivering data efficiently with high performance to different types of data lakes.


Whitepaper

Five Principles for Effectively Managing Your Data Lake Pipeline


Data Sheet

Data Integration Solution for Streaming Data Pipelines


Video

Qlik for Data Lakes Platform Demonstration